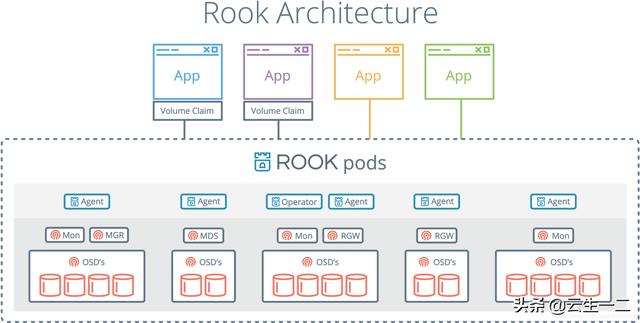

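Persistent storage for containers

Persistent storage is the key mechanism for preserving a container's storage state. A storage plugin mounts a remote volume inside the container, backed by the network or some other mechanism. Files created in the container are then actually stored on a remote storage server, or distributed across multiple nodes, and have no tie to the current host. As a result, whichever host you start a new container on, it can request the specified persistent volume and access the data stored in it. Thanks to Kubernetes' loosely coupled design, most storage projects, such as Ceph, GlusterFS and NFS, can provide persistent storage for Kubernetes.

Ceph distributed storage system

Ceph is a highly scalable distributed storage solution that provides object, file and block storage. On each storage node you will find the file system in which Ceph stores objects and a Ceph OSD (Object Storage Daemon) process. A Ceph cluster also runs Ceph MON (monitor) daemons, which keep the cluster highly available.

Rook

Rook is an open-source cloud-native storage orchestrator: it provides the platform, framework and support for a range of storage solutions to integrate natively with cloud-native environments. Rook turns storage software into self-managing, self-scaling and self-healing storage services by automating deployment, bootstrapping, configuration, provisioning, scaling, upgrades, migration, disaster recovery, monitoring and resource management. It does this with the facilities provided by the underlying cloud-native container management, scheduling and orchestration platform. Rook currently supports Ceph, NFS, Minio Object Store and CockroachDB, and uses Kubernetes primitives to run Ceph on Kubernetes. The accompanying diagram (image 1) shows how Rook integrates Ceph with Kubernetes.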

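With Rook running in the Kubernetes cluster, applications can mount block devices and file systems managed by Rook, or use the S3/Swift API for object storage. The Rook operator automatically configures the storage components and monitors the cluster to keep the storage available and healthy. The operator is a simple container with everything needed to bootstrap and monitor the storage cluster: it starts and monitors the Ceph monitor pods and the OSD daemons, which provide basic RADOS storage, and it manages the CRDs for pools, object stores (S3/Swift) and file systems by initializing the pods and other components needed to run those services. It watches the storage daemons to make sure the cluster stays healthy; Ceph mons are started or failed over when necessary, and other adjustments are made as the cluster grows or shrinks. The operator also watches for desired-state changes requested through the API server and applies them.

The Rook operator also creates the Rook agents, which are pods deployed on every Kubernetes node. Each agent configures a FlexVolume plugin that integrates with the Kubernetes volume controller and handles all storage operations needed on the node, such as attaching network storage devices, mounting volumes and formatting file systems.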

Deployment environment

172.16.1.198 master

172.16.1.199 node1

172.16.1.200 node2

Rook version 1.0

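Add a new disk to each node and run lsblk to make sure the system sees it. Rook scans the node's disks automatically and does not use disks that are already partitioned or carry a file system, which keeps the system disk safe. By the same token, the new disk must be left unpartitioned and unformatted, or Rook will skip it.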
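The blank-disk requirement can be checked before Rook scans the node. The sketch below is not part of Rook: `is_blank_disk` is a hypothetical helper that reads `lsblk -rno NAME,TYPE,FSTYPE <disk>` output on stdin and succeeds only when the disk appears as a single line with no partitions and no file-system signature. Treat it as a rough pre-check under those assumptions, not a replacement for Rook's own device filtering.

```shell
# Hypothetical helper: succeed (exit 0) only if the lsblk output on stdin describes
# one whole disk with no partitions, holders or file-system signature.
# Expected input: lsblk -rno NAME,TYPE,FSTYPE /dev/sdX
is_blank_disk() {
  awk '
    NR > 1   { dirty = 1 }   # extra lines mean partitions or holders (e.g. LVM)
    $3 != "" { dirty = 1 }   # a non-empty FSTYPE means an existing signature
    END {
      if (NR == 1 && !dirty) exit 0
      exit 1
    }
  '
}
```

For example: `lsblk -rno NAME,TYPE,FSTYPE /dev/sdb | is_blank_disk && echo "/dev/sdb looks blank"`.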

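Download Rook (this post uses the Rook 1.0 manifests, so check out the matching release branch)

git clone --single-branch --branch release-1.0 https://github.com/rook/rook.git

cd rook/cluster/examples/kubernetes/ceph/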

Deploy the common resources (namespace, CRDs and RBAC)

kubectl create -f common.yaml

Deploy the operator

kubectl create -f operator.yaml

Deploy the Ceph cluster

kubectl create -f cluster.yaml
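The order of the three commands matters, because `operator.yaml` and `cluster.yaml` depend on the namespace and CRDs created by `common.yaml`. A minimal sketch, assuming it is run from `cluster/examples/kubernetes/ceph/`: `deploy_rook_ceph` is a hypothetical wrapper that creates the manifests in sequence and stops at the first failure.

```shell
# Hypothetical wrapper: create the Rook manifests in dependency order and stop at
# the first failure, so cluster.yaml is never applied without the operator.
deploy_rook_ceph() {
  local manifest
  for manifest in common.yaml operator.yaml cluster.yaml; do
    echo "creating ${manifest}"
    kubectl create -f "${manifest}" || return 1
  done
}
```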

Wait a moment, then check the deployment progress:

kubectl get pod -n rook-ceph

NAME                                  READY   STATUS      RESTARTS   AGE
rook-ceph-agent-7xr66                 1/1     Running     0          18h
rook-ceph-agent-gmscx                 1/1     Running     0          11m
rook-ceph-agent-sfxh8                 1/1     Running     0          18h
rook-ceph-mgr-a-bdf6bcfc5-z5fbx       1/1     Running     0          18h
rook-ceph-mon-b-85ffff8969-dbjxd      1/1     Running     0          18h
rook-ceph-mon-c-6f9cf8584d-rvm6t      1/1     Running     0          18h
rook-ceph-mon-d-7f67c5465b-hmr7n      1/1     Running     0          7m41s
rook-ceph-operator-775cf575c5-9zjgf   1/1     Running     0          18h
rook-ceph-osd-0-84899b487b-v4nkz      1/1     Running     0          18h
rook-ceph-osd-1-845cf77594-5xdpn      1/1     Running     0          18h
rook-ceph-osd-prepare-node1-tfh8w     0/2     Completed   0          17h
rook-ceph-osd-prepare-node2-mqnxc     0/2     Completed   0          17h
rook-discover-dxkgm                   1/1     Running     0          11m
rook-discover-xblpc                   1/1     Running     0          18h
rook-discover-zg45m                   1/1     Running     0          18h
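Rather than eyeballing this list, the same output can be checked mechanically. In this sketch `rook_pods_ready` is a hypothetical helper that reads `kubectl get pod -n rook-ceph --no-headers` output on stdin. It treats `Completed` pods (the `rook-ceph-osd-prepare-*` jobs above) as fine, and any other pod as ready only when it is `Running` with all of its containers ready.

```shell
# Hypothetical helper: reads `kubectl get pod -n rook-ceph --no-headers` on stdin.
# Prints every pod that is not ready and exits non-zero if there is at least one.
rook_pods_ready() {
  awk '
    {
      # Columns: NAME READY STATUS RESTARTS AGE
      if ($3 == "Completed") next   # one-shot jobs such as rook-ceph-osd-prepare-*
      split($2, ready, "/")         # "1/1" -> ready[1] = 1, ready[2] = 1
      if ($3 != "Running" || ready[1] != ready[2]) {
        print "not ready: " $1 " (" $2 " " $3 ")"
        bad = 1
      }
    }
    END { if (bad) exit 1 }
  '
}
```

Usage: `kubectl get pod -n rook-ceph --no-headers | rook_pods_ready && echo "all Rook pods are up"`.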

Deploy the StorageClass

kubectl create -f storageclass.yaml

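Create a pod that uses a PV. The manifest puts both objects in the zlx namespace, which must exist beforehand (kubectl create namespace zlx):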

vim centos.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: resize-pvc
  namespace: zlx
  labels:
    app: centos
spec:
  storageClassName: rook-ceph-block
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: centos
  namespace: zlx
spec:
  selector:
    matchLabels:
      app: centos
  template:
    metadata:
      labels:
        app: centos
    spec:
      containers:
        - name: centos
          image: centos
          command:
            - bash
            - -c
            - "while true;do echo \"I'm centos\";sleep 30;done"
          resources:
            limits:
              memory: "128Mi"
              cpu: "200m"
          volumeMounts:
            - name: resize-pvc
              mountPath: /opt/
      volumes:
        - name: resize-pvc
          persistentVolumeClaim:
            claimName: resize-pvc

kubectl create -f centos.yaml

Check the PV and PVC:

$ [K8sSj] kubectl get pv,pvc -n zlx

NAME                                                        CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM            STORAGECLASS      REASON   AGE
persistentvolume/pvc-586fd31d-75ed-11e9-a901-26def9e195d0   1Gi        RWO            Delete           Bound    zlx/resize-pvc   rook-ceph-block            57m

NAME                               STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS      AGE
persistentvolumeclaim/resize-pvc   Bound    pvc-586fd31d-75ed-11e9-a901-26def9e195d0   1Gi        RWO            rook-ceph-block   57m
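With `volumeBindingMode: Immediate` on the StorageClass, the PVC should become `Bound` shortly after it is created. A small polling sketch, assuming `kubectl` points at this cluster: `wait_pvc_bound` and its 2-second interval are hypothetical choices, and it only reads the PVC's `.status.phase` through a jsonpath query.

```shell
# Hypothetical helper: poll a PVC until .status.phase is Bound.
# Arguments: namespace, PVC name, optional number of attempts (default 30, 2s apart).
wait_pvc_bound() {
  local ns="$1" pvc="$2" attempts="${3:-30}" phase="" i=0
  while [ "$i" -lt "$attempts" ]; do
    # Ignore lookup errors (e.g. PVC not created yet) and simply retry
    phase="$(kubectl get pvc "$pvc" -n "$ns" -o jsonpath='{.status.phase}' 2>/dev/null || true)"
    if [ "$phase" = "Bound" ]; then
      return 0
    fi
    sleep 2
    i=$((i + 1))
  done
  echo "PVC ${ns}/${pvc} is still '${phase}' after ${attempts} attempts" >&2
  return 1
}
```

Usage: `wait_pvc_bound zlx resize-pvc && kubectl get pv,pvc -n zlx`.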

Check the pod

The describe output shows that resize-pvc is mounted into the deployment at /opt/:

$ [K8sSj] kubectl get pod -n zlx

NAME                      READY   STATUS    RESTARTS   AGE
centos-5965946c94-9bc9p   1/1     Running   0          45m

$ [K8sSj] kubectl describe deployments.apps -n zlx centos

Name:                   centos
Namespace:              zlx
CreationTimestamp:      Tue, 14 May 2019 10:09:46 +0800
Labels:                 <none>
Annotations:            deployment.kubernetes.io/revision=1
Selector:               app=centos
Replicas:               1 desired | 1 updated | 1 total | 1 available | 0 unavailable
StrategyType:           RollingUpdate
MinReadySeconds:        0
RollingUpdateStrategy:  25% max unavailable, 25% max surge
Pod Template:
  Labels:  app=centos
  Containers:
   centos:
    Image:      centos
    Port:       <none>
    Host Port:  <none>
    Command:
      bash
      -c
      while true;do echo "I'm centos";sleep 30;done
    Limits:
      cpu:        200m
      memory:     128Mi
    Environment:  <none>
    Mounts:
      /opt/ from resize-pvc (rw)
  Volumes:
   resize-pvc:
    Type:       PersistentVolumeClaim (a reference to a PersistentVolumeClaim in the same namespace)
    ClaimName:  resize-pvc
    ReadOnly:   false
Conditions:
  Type           Status  Reason
  ----           ------  ------
  Available      True    MinimumReplicasAvailable
  Progressing    True    NewReplicaSetAvailable
OldReplicaSets:  <none>
NewReplicaSet:   centos-5965946c94 (1/1 replicas created)
Events:
  Type    Reason             Age   From                   Message
  ----    ------             ----  ----                   -------
  Normal  ScalingReplicaSet  46m   deployment-controller  Scaled up replica set centos-5965946c94 to 1

$ [K8sSj] kubectl exec -it -n zlx centos-5965946c94-9bc9p bash

[root@centos-5965946c94-9bc9p /]# df -h
Filesystem      Size  Used Avail Use% Mounted on
overlay         189G  6.4G  174G   4% /
tmpfs            64M     0   64M   0% /dev
tmpfs           3.9G     0  3.9G   0% /sys/fs/cgroup
/dev/rbd0      1014M   34M  981M   4% /opt
/dev/vda1       189G  6.4G  174G   4% /etc/hosts
shm              64M     0   64M   0% /dev/shm
tmpfs           3.9G   12K  3.9G   1% /run/secrets/kubernetes.io/serviceaccount
tmpfs           3.9G     0  3.9G   0% /proc/acpi
tmpfs           3.9G     0  3.9G   0% /proc/scsi
tmpfs           3.9G     0  3.9G   0% /sys/firmware
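To compare the volume size before and after an expansion, it helps to pull just the `/opt` line out of `df`. `mount_size` is a hypothetical helper that prints the Size column for a given mount point from `df -h` output on stdin; it assumes the usual layout where the mount point is the last column.

```shell
# Hypothetical helper: print the Size column of `df -h` output (stdin) for one mount point.
# Exits non-zero if the mount point does not appear in the output.
mount_size() {
  local mountpoint="$1"
  awk -v mp="$mountpoint" '
    $NF == mp { print $2; found = 1 }   # mount point is the last column
    END { if (!found) exit 1 }
  '
}
```

For the state shown above, `kubectl exec -n zlx centos-5965946c94-9bc9p -- df -h /opt | mount_size /opt` would print `1014M`.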

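Expansion

I was stuck on expansion for a long time. The Kubernetes documentation says Ceph-backed PVs/PVCs can be expanded, so I naively assumed Kubernetes and Rook would carry out the whole expansion for me. On 1.13, expanding the PVC is supposed to expand the PV as well, and according to the docs the PV should report the new capacity once the pod is restarted, but no matter what I tried that step never worked. A Kubernetes issue described it as a bug fixed in 1.14, so I upgraded the cluster to 1.14. After the upgrade the PV did report the correct capacity, but inside the container the mounted size had not changed. More googling turned up a Rook issue stating that Rook does not support automatic expansion of Ceph block devices. To my considerable frustration, what finally worked was resizing the image on the Ceph side and then growing the file system (resize2fs, or xfs_growfs for XFS). The detailed steps follow.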

Modify the StorageClass

Add allowVolumeExpansion: true (for example with kubectl edit storageclass rook-ceph-block):

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  creationTimestamp: 2019-05-14T01:55:31Z
  name: rook-ceph-block
  resourceVersion: "5097626"
  selfLink: /apis/storage.k8s.io/v1/storageclasses/rook-ceph-block
  uid: 5b30e902-75eb-11e9-a901-26def9e195d0
parameters:
  blockPool: replicapool
  clusterNamespace: rook-ceph
  fstype: xfs
provisioner: ceph.rook.io/block
reclaimPolicy: Delete
volumeBindingMode: Immediate
allowVolumeExpansion: true
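The same change can be made without an interactive editor. A sketch using `kubectl patch`; `enable_volume_expansion` is a hypothetical wrapper, and `rook-ceph-block` is simply the StorageClass name used in this post.

```shell
# Hypothetical wrapper: set allowVolumeExpansion on a StorageClass with a patch
# instead of `kubectl edit`. Defaults to the StorageClass name used in this post.
enable_volume_expansion() {
  local sc="${1:-rook-ceph-block}"
  kubectl patch storageclass "$sc" -p '{"allowVolumeExpansion": true}'
}
```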

Expand the PVC (change spec.resources.requests.storage from 1Gi to 2Gi):

$ [K8sSj] kubectl edit pvc -n zlx resize-pvc

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  annotations:
    pv.kubernetes.io/bind-completed: "yes"
    pv.kubernetes.io/bound-by-controller: "yes"
    volume.beta.kubernetes.io/storage-provisioner: ceph.rook.io/block
  creationTimestamp: 2019-05-14T02:09:46Z
  finalizers:
  - kubernetes.io/pvc-protection
  labels:
    app: centos
  name: resize-pvc
  namespace: zlx
  resourceVersion: "5075023"
  selfLink: /api/v1/namespaces/zlx/persistentvolumeclaims/resize-pvc
  uid: 586fd31d-75ed-11e9-a901-26def9e195d0
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 2Gi ## 1Gi--->2Gi
  storageClassName: rook-ceph-block
  volumeMode: Filesystem
  volumeName: pvc-586fd31d-75ed-11e9-a901-26def9e195d0
status:
  accessModes:
  - ReadWriteOnce
  capacity:
    storage: 1Gi
  phase: Bound
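The PVC edit can likewise be done with a patch. `request_pvc_size` is a hypothetical wrapper. The API server only accepts a larger size when the StorageClass has `allowVolumeExpansion: true`, and, as described above, in this setup the change only updates the Kubernetes objects: the RBD image and the file system still have to be grown by hand afterwards.

```shell
# Hypothetical wrapper: request a new storage size for a PVC non-interactively.
# Arguments: namespace, PVC name, new size as a Kubernetes quantity (e.g. 2Gi).
request_pvc_size() {
  local ns="$1" pvc="$2" size="$3"
  kubectl patch pvc "$pvc" -n "$ns" \
    -p "{\"spec\":{\"resources\":{\"requests\":{\"storage\":\"${size}\"}}}}"
}
```

Usage: `request_pvc_size zlx resize-pvc 2Gi`.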

Check the PV and PVC

The PV already shows 2Gi, while the PVC still reports 1Gi:

$ [K8sSj] kubectl get pv,pvc -n zlx

NAME                                                        CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM            STORAGECLASS      REASON   AGE
persistentvolume/pvc-586fd31d-75ed-11e9-a901-26def9e195d0   2Gi        RWO            Delete           Bound    zlx/resize-pvc   rook-ceph-block            127m

NAME                               STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS      AGE
persistentvolumeclaim/resize-pvc   Bound    pvc-586fd31d-75ed-11e9-a901-26def9e195d0   1Gi        RWO            rook-ceph-block   127m

Restart the pod (delete it and let the Deployment create a new one):

$ [K8sSj] kubectl delete -n zlx pod centos-5965946c94-9bc9p

pod "centos-5965946c94-9bc9p" deleted

$ [K8sSj] kubectl get pod -n zlx

NAME                      READY   STATUS              RESTARTS   AGE
centos-5965946c94-cq45l   0/1     ContainerCreating   0          43s

Check the PVC size again:

NAME         STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS      AGE
resize-pvc   Bound    pvc-d2ba2309-760d-11e9-ab29-fa163e753730   2Gi        RWO            rook-ceph-block   2m57s

Inside the container, however, the mounted file system is still 1G:

kubectl exec -it -n zlx centos-5965946c94-sx948 sh

sh-4.2# df -h

Filesystem      Size  Used Avail Use% Mounted on
overlay         189G  6.2G  174G   4% /
tmpfs            64M     0   64M   0% /dev
tmpfs           3.9G     0  3.9G   0% /sys/fs/cgroup
/dev/rbd0      1014M   34M  981M   4% /opt
/dev/vda1       189G  6.2G  174G   4% /etc/hosts
shm              64M     0   64M   0% /dev/shm
tmpfs           3.9G   12K  3.9G   1% /run/secrets/kubernetes.io/serviceaccount
tmpfs           3.9G     0  3.9G   0% /proc/acpi
tmpfs           3.9G     0  3.9G   0% /proc/scsi
tmpfs           3.9G     0  3.9G   0% /sys/firmware

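Exec into the rook-ceph-tools pod (the Rook toolbox, deployed from toolbox.yaml in the same examples directory) and grow the image with rbd. Note that rbd's --size is in MB when no unit is given, so --size 3096 grows the image to 3096 MB (about 3 GiB), more than the 2Gi set on the PVC: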

kubectl exec -it -n rook-ceph rook-ceph-tools-b8c679f95-llvmp sh
sh-4.2# rbd -p replicapool ls
pvc-586fd31d-75ed-11e9-a901-26def9e195d0
sh-4.2# rbd -p replicapool status
rbd: image name was not specified
sh-4.2# rbd -p replicapool resize --size 3096 pvc-586fd31d-75ed-11e9-a901-26def9e195d0
Resizing image: 100% complete...done.
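`rbd resize --size` takes megabytes when no unit suffix is given, so converting a Kubernetes quantity by hand is easy to get wrong (3 GiB would be 3072, not 3096). A minimal sketch, assuming only whole-GiB values such as `2Gi` need handling: `rbd_resize_to` is a hypothetical helper, meant to be run inside the toolbox pod, that converts the size and then calls `rbd resize`.

```shell
# Hypothetical helper: grow an RBD image to a whole-GiB size given as "NGi".
# rbd's --size defaults to MB (1024-based), so 2Gi becomes --size 2048.
rbd_resize_to() {
  local pool="$1" image="$2" size="$3" gib
  gib="${size%Gi}"
  case "$gib" in
    ''|*[!0-9]*)
      echo "unsupported size: ${size} (expected e.g. 2Gi)" >&2
      return 1 ;;
  esac
  rbd -p "$pool" resize --size "$((gib * 1024))" "$image"
}
```

Usage inside the toolbox: `rbd_resize_to replicapool pvc-586fd31d-75ed-11e9-a901-26def9e195d0 2Gi`, which keeps the image size equal to the PVC request.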

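Find the node running the pod that mounts the PVC, log in to that node and grow the file system on the RBD device. /dev/rbd0 is the device in this example; the number depends on which images are mapped on that node.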

kubectl get pod -n zlx -owide
NAME                      READY   STATUS    RESTARTS   AGE    IP             NODE      NOMINATED NODE   READINESS GATES
centos-5965946c94-sx948   1/1     Running   0          8m7s   192.168.0.17   master1   <none>           <none>
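The node name can also be queried directly, and on that node the RBD device backing the PV can be located through the kernel's rbd sysfs entries, where `/sys/bus/rbd/devices/<id>/name` holds the image name mapped as `/dev/rbd<id>`. Both functions are hypothetical helpers; the `SYSFS_RBD` override exists only so the lookup can be tried against a fake directory.

```shell
# Hypothetical helper: print the node a pod is scheduled on.
pod_node() {
  local ns="$1" pod="$2"
  kubectl get pod "$pod" -n "$ns" -o jsonpath='{.spec.nodeName}'
}

# Hypothetical helper, run on that node: print /dev/rbdN for a mapped RBD image by
# reading /sys/bus/rbd/devices/<id>/name. SYSFS_RBD overrides the directory for testing.
rbd_device_for_image() {
  local image="$1" dir="${SYSFS_RBD:-/sys/bus/rbd/devices}" d
  for d in "$dir"/*; do
    [ -f "$d/name" ] || continue
    if [ "$(cat "$d/name")" = "$image" ]; then
      echo "/dev/rbd${d##*/}"   # the sysfs entry name is the device id
      return 0
    fi
  done
  return 1
}
```

Usage: run `pod_node zlx centos-5965946c94-sx948` from a machine with kubectl, then `rbd_device_for_image pvc-586fd31d-75ed-11e9-a901-26def9e195d0` on the reported node.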

## If the file system is ext3 or ext4, grow it with resize2fs

resize2fs /dev/rbd0

## If the file system is xfs (the StorageClass above uses fstype: xfs), resize2fs fails with:

resize2fs 1.44.1 (24-Mar-2018)
resize2fs: Bad magic number in super-block while trying to open /dev/rbd0
Couldn't find valid filesystem superblock.

## For xfs, grow it with xfs_growfs instead

xfs_growfs /dev/rbd0
meta-data=/dev/rbd0              isize=512    agcount=9, agsize=31744 blks
         =                       sectsz=512   attr=2, projid32bit=1
         =                       crc=1        finobt=1 spinodes=0 rmapbt=0
         =                       reflink=0
data     =                       bsize=4096   blocks=262144, imaxpct=25
         =                       sunit=1024   swidth=1024 blks
naming   =version 2              bsize=4096   ascii-ci=0 ftype=1
log      =internal               bsize=4096   blocks=2560, version=2
         =                       sectsz=512   sunit=8 blks, lazy-count=1
realtime =none                   extsz=4096   blocks=0, rtextents=0
data blocks changed from 262144 to 792576
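Which tool to use depends only on the file system type, so the choice can be automated. A sketch, assuming `blkid` and `findmnt` (util-linux) are available on the node: `grow_fs` is a hypothetical helper that runs `resize2fs` on the device for ext2/3/4 and `xfs_growfs` on the mount point for XFS, which is the argument the xfs_growfs man page documents (passing the device also worked in the run above).

```shell
# Hypothetical helper: grow a mounted file system after its RBD image was enlarged.
# ext2/3/4: resize2fs on the device; xfs: xfs_growfs on the mount point (must be mounted).
grow_fs() {
  local dev="$1" fstype mnt
  fstype="$(blkid -o value -s TYPE "$dev" || true)"
  case "$fstype" in
    ext2|ext3|ext4)
      resize2fs "$dev" ;;
    xfs)
      mnt="$(findmnt -n -o TARGET --source "$dev" | head -n 1)"
      if [ -z "$mnt" ]; then
        echo "${dev} is not mounted; xfs can only be grown while mounted" >&2
        return 1
      fi
      xfs_growfs "$mnt" ;;
    *)
      echo "don't know how to grow '${fstype}' on ${dev}" >&2
      return 1 ;;
  esac
}
```

Usage on the node: `grow_fs /dev/rbd0`.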

Tearing down the cluster

First delete the pods that use the PVC, then the related PVCs and PVs.

Delete the rook-ceph resources:

kubectl delete -f cluster.yaml

kubectl delete -f operator.yaml

kubectl delete -f common.yaml

On every node, delete the Rook data directory:

rm -fr /var/lib/rook/*

On every node, reset the disk that was given to Rook:

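#!/usr/bin/env bash
# Disk that was given to Rook; adjust per node
DISK="/dev/sdb"
# Wipe GPT and MBR partition structures so the disk is blank again
sgdisk --zap-all "$DISK"
# Remove device-mapper mappings left behind by ceph-volume, which otherwise lock the disk
ls /dev/mapper/ceph-* | xargs -I% -- dmsetup remove %
# Remove leftover ceph-<UUID> directories under /dev
rm -rf /dev/ceph-*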
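Because `sgdisk --zap-all` destroys the partition table without asking, a guard against a mistyped `DISK` is cheap insurance. `disk_in_use` is a hypothetical check that succeeds if the disk or any of its partitions is mounted (lsblk also reports swap as `[SWAP]`). It is not a complete safety check: a disk that is in use without being mounted, for example by an OSD, would pass it.

```shell
# Hypothetical guard: succeed if the disk or any partition on it has a mount point.
# lsblk prints one MOUNTPOINT line per device; unmounted devices give empty lines.
disk_in_use() {
  local disk="$1"
  lsblk -nro MOUNTPOINT "$disk" | grep . >/dev/null
}
```

For example, place `if disk_in_use "$DISK"; then echo "refusing to wipe $DISK" >&2; exit 1; fi` before the `sgdisk` line.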

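Summary

At present, rook-ceph-rbd is not a good choice if you want PVs/PVCs on Kubernetes that can be expanded automatically. Consider heketi-glusterfs or CephFS instead; later posts will cover setting up these two options and compare the pros and cons of each approach.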